PodPresets are a cool new addition to Kubernetes, introduced in v1.7 as an alpha feature. At first they seem relatively simple, but once I began to realize their current AND potential value, I came up with all kinds of use cases.

Basically, PodPresets inject configuration at creation time into any pod whose labels match the preset's label selector. So what does this mean? Have a damn good labeling strategy. This configuration can come in the form of:

- Environment variables

- Config Maps

- Secrets

- Volumes/Volume Mounts

Everything in a PodPreset configuration is merged into the pod spec unless there is a conflict, in which case the pod spec wins: the pod is created as written, without the injected settings.
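To make that merge rule concrete, here is a toy model in Python. It is not the admission controller's code, just a sketch under one assumption: a "conflict" means the pod and the preset set the same variable name to different values, and in that case the pod keeps its own env untouched. The names `merge_env` and `apply_preset` are mine, purely for illustration.

```python
from typing import Dict, List, Optional

EnvList = List[Dict[str, str]]


def merge_env(container_env: EnvList, preset_env: EnvList) -> Optional[EnvList]:
    """Return container_env plus preset_env, or None on a conflict.

    Assumption: a conflict is the same name carrying a different value.
    """
    merged = {item["name"]: item["value"] for item in container_env}
    for item in preset_env:
        existing = merged.get(item["name"])
        if existing is not None and existing != item["value"]:
            return None  # conflict: the caller keeps the original pod spec
        merged[item["name"]] = item["value"]
    return [{"name": name, "value": value} for name, value in merged.items()]


def apply_preset(container_env: EnvList, preset_env: EnvList) -> EnvList:
    """Apply the preset if it merges cleanly; otherwise leave the env as is."""
    merged = merge_env(container_env, preset_env)
    return container_env if merged is None else merged
```

A container with no env of its own simply receives the preset's variables, while a container that already sets one of them to a different value comes back unchanged.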

Benefits:

- Reusable config across anything with the same service type (datastores as an example)

- Simpler pod specs

- Pod authors can opt in to a PodPreset simply by adding a label

Example Use Case: What IF data stores could be configured with environment variables? I know, wishful thinking... but we can work around this. Then we could set up a PodPreset for MySQL/MariaDB to expose port 3306, configure the InnoDB storage engine, and apply other generic config to all MySQL servers that get provisioned on the cluster.

Generic MySQL Pod Spec:

    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql
      labels:
        app: mysql-server
        preset: mysql-db-preset
    spec:
      containers:
      - name: mysql
        image: mysql:8.0
        command: ["mysqld"]
      initContainers:
      - name: init-mysql
        image: initmysql
        command: ['script.sh']

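Notice there is an init container in the pod spec, so no modification of the official MySQL image should be required.

The script executed in the init container could be written to template the MySQL my.ini file prior to starting mysqld. Keep in mind that the init container and the mysql container do not share a filesystem, so the file has to land on a volume that both of them mount. The script may look something like this: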

    #!/bin/bash
    cat >/etc/mysql/my.ini <<EOF
    [mysqld]
    # Connection and Thread variables
    port = $MYSQL_DB_PORT
    socket = $SOCKET_FILE  # Use mysqld.sock on Ubuntu, conflicts with AppArmor otherwise
    basedir = $MYSQL_BASE_DIR
    datadir = $MYSQL_DATA_DIR
    tmpdir = /tmp
    max_allowed_packet = 16M
    default_storage_engine = $MYSQL_ENGINE
    ...
    EOF
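One weakness of a heredoc is that an unset variable silently expands to an empty value. Here is a rough Python take on the same templating step that fails loudly instead. It assumes the init image ships Python, which the post does not specify, and `MY_INI_TEMPLATE` and `render_my_ini` are names I made up. The keys mirror the script above, minus the elided part.

```python
from string import Template
from typing import Mapping

# Same settings as the shell heredoc; $NAME placeholders are filled from env.
MY_INI_TEMPLATE = Template("""\
[mysqld]
port = $MYSQL_DB_PORT
socket = $SOCKET_FILE
basedir = $MYSQL_BASE_DIR
datadir = $MYSQL_DATA_DIR
tmpdir = /tmp
max_allowed_packet = 16M
default_storage_engine = $MYSQL_ENGINE
""")


def render_my_ini(env: Mapping[str, str]) -> str:
    """Fill the template from env; raises KeyError if a variable is missing."""
    return MY_INI_TEMPLATE.substitute(env)
```

Calling `render_my_ini(os.environ)` inside the init container would produce the file contents. With only the four variables from the PodPreset in this post, it raises a `KeyError` for `MYSQL_BASE_DIR`, because the script references that variable but the preset does not set it.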

Corresponding PodPreset:

    kind: PodPreset
    apiVersion: settings.k8s.io/v1alpha1
    metadata:
      name: mysql-db-preset
      namespace: somenamespace
    spec:
      selector:
        matchLabels:
          preset: mysql-db-preset  # must equal the pod's "preset" label
      env:
      - name: MYSQL_DB_PORT
        value: "3306"
      - name: SOCKET_FILE
        value: "/var/run/mysql.sock"
      - name: MYSQL_DATA_DIR
        value: "/data"
      - name: MYSQL_ENGINE
        value: "innodb"
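Since everything hinges on labels, here is a small Python sketch of how a `matchLabels` selector decides whether a preset applies to a pod. The helper `matches` is hypothetical, and it ignores `matchExpressions`, which real label selectors also support. The pod labels are the ones from the MySQL pod spec above.

```python
from typing import Dict

Labels = Dict[str, str]


def matches(match_labels: Labels, pod_labels: Labels) -> bool:
    """True when every key/value pair in match_labels is present on the pod."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


# Labels from the generic MySQL pod spec in this post.
pod_labels = {"app": "mysql-server", "preset": "mysql-db-preset"}
```

The match is exact on both key and value: `matches({"preset": "mysql-db-preset"}, pod_labels)` is true, while a selector asking for any other `preset` value would skip this pod and nothing would be injected.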

This is a fairly simple example of how MySQL servers might be implemented using PodPresets, but hopefully you can begin to see how PodPresets can abstract away much of the complex configuration.

More ideas –

Standardized Log configuration – Many large enterprises would like to have a logging standard. Say, something as simple as all logs in JSON, formatted as key:value pairs. So what if we simply included that as configuration via PodPresets?
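As a sketch of the application side of that idea, here is how a Python service might pick up a logging standard from environment variables. `LOG_LEVEL` and `LOG_FORMAT` are hypothetical names a logging PodPreset could inject; nothing in Kubernetes defines them, and `JsonFormatter` and `configure_logging` are illustrative only.

```python
import json
import logging
import os
from typing import Mapping


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object of key:value pairs."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def configure_logging(env: Mapping[str, str] = os.environ) -> logging.Logger:
    """Build the app logger from LOG_LEVEL / LOG_FORMAT (hypothetical names)."""
    logger = logging.getLogger("app")
    handler = logging.StreamHandler()
    if env.get("LOG_FORMAT", "json") == "json":
        handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace any handlers from earlier calls
    logger.setLevel(env.get("LOG_LEVEL", "INFO").upper())
    return logger
```

Every service that calls `configure_logging()` at startup would then follow whatever the preset dictates, without each pod spec repeating the settings.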

Default Metrics – Default metrics per language, depending on the monitoring platform used. For example, expose a default set of metrics for Prometheus and just bake it in through config.

I see PodPresets being expanded rapidly in the future. Some possibilities might include:

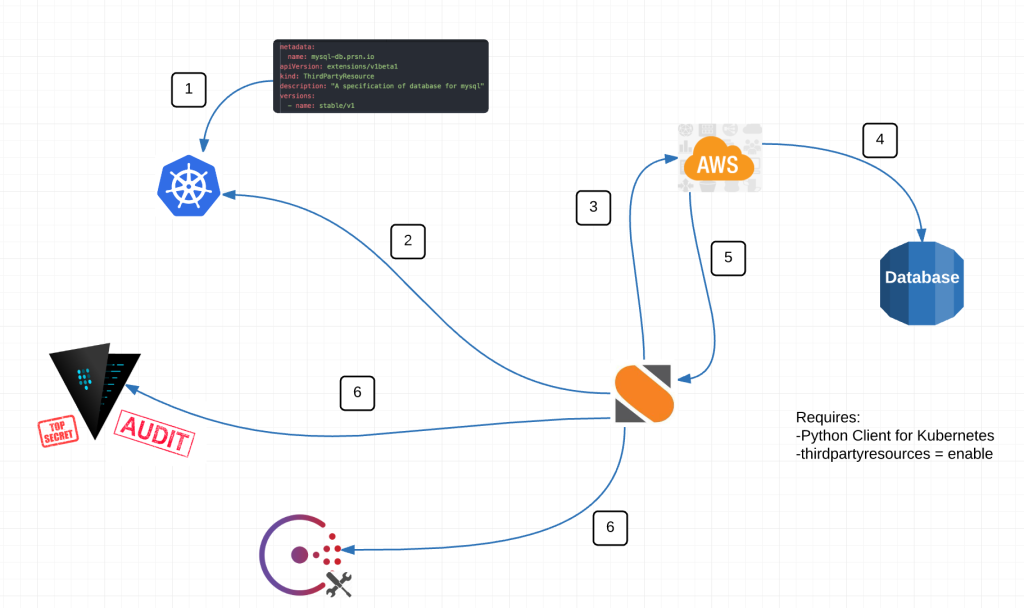

- Integration with alternative Key/Value stores

  - Our team runs Consul (HashiCorp) to share/coordinate config, DNS and Service Discovery between container and virtual machine resources. It would be awesome not to have to bake envconsul or the Consul agent into our Docker images.

- Configuration injection from Cloud Providers

- Secrets injection from alternate secrets management stores

  - We follow a very similar pattern with Vault as we do with Consul: one single Secrets/Cert Management store for container and virtual machine resources.

- Cert injection

- Init containers

  - What if Init containers could be defined in PodPresets?

I’m sure there are a ton more ways PodPresets could be used. I look forward to seeing this progress as it matures.

@devoperandi