Over the last several months, various deployment (CI/CD) pipelines have cropped up within the Kubernetes community, and our team also released one at KubeCon Seattle 2016. As a result, I’ve been asked on a couple of occasions why we built our own. So here is my take.

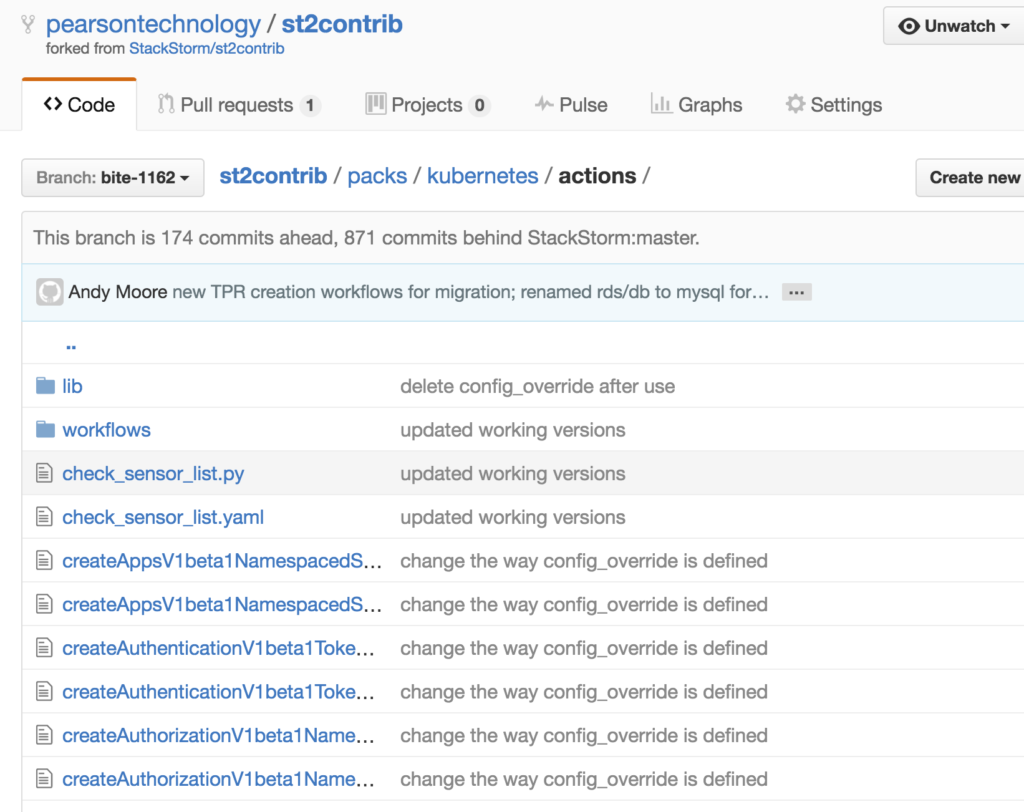

We began this endeavor sometime in late 2015. You can see that our initial commit to GitHub is dated Feb 27th, 2016. But if you look closer, you will notice it’s a very large commit: some 806 changes. This is because we began the project quite a bit before that date. So what does this mean? Nothing other than that we weren’t aware of any other CD pipeline projects at the time and we needed one, so we took it upon ourselves to create one. You can see my slides from KubeCon London, March 2016, where I talk about it a fair amount.

My goal with this blog post is not to persuade you toward one particular CD pipeline or another. I simply don’t care, beyond the fact that contributions to Pearson’s CD pipeline would mean we can make it better, faster. Beyond this we get nothing out of it.

Release it as Open Source –

We chose to release our project as open source at KubeCon Seattle 2016 for three reasons: to share our thoughts and experience on the topic, to offer another option for the community to consume, and to provide insight into how we prefer to build/deploy/test/manage our environments.

Is it perfect? No.

Is it great? Yes, in my opinion it’s pretty great.

Does it have its own pros AND cons? Hell yes it does.

Let’s dig in, shall we?

There are two projects I’m aware of that claim many of the same capabilities. It is NOT my duty to explain every capability in detail, but rather to point out what I see as the pros/cons/differences (which often correlate to different modes of thought) between them.

I will be happy to modify this blog post if/when I agree with a particular argument so feel free to add comments.

Fabric8 CD Pipeline – (Fabric8)

https://fabric8.io/guide/cdelivery.html

The Fabric8 CD pipeline was purpose-built for Kubernetes/OpenShift. It has some deeper integrations with external components that are primarily Red Hat affiliated. Much of the documentation focuses on Java-based platforms, even though they remark that it can integrate with many other languages.

Kubernetes/OpenShift

Capable of working out of the box with Kubernetes and OpenShift. My understanding is you can set this as a FABRIC8_PROFILE to enable one or the other.

Java/JBoss/Red Hat as a Focus Point

While they mention being able to work with multiple languages, their focus is very much Java. They have some deep integrations with Apache Camel and other tools around Java including JBoss Fuse.

Artifact Repository

The CD pipeline for Fabric8 requires Nexus, an artifact repository.

Gogs or GitHub

Gogs is required for on-prem git repository hosting. I’m not entirely sure why Gogs would matter if the pipeline is simply accessing a git repo, but apparently it does. Alternatively, there is integration with GitHub.

Code Quality

Based on the documentation, Fabric8 appears to require SonarQube for code quality. This is especially relevant if you are running a Java project, as Fabric8 automatically recognizes Java projects and attempts to integrate them. SonarQube can support a variety of languages depending on your use case.

Pipeline Libraries

Fabric8 has a list of libraries providing reusable bits of code to build your pipeline from: https://github.com/fabric8io/fabric8-jenkinsfile-library. Unfortunately, these libraries tend to have some requirements around SonarQube and the like.

Multi-Tenant

I’m not entirely sure as of yet.

Documentation

Fabric8’s documentation is great but very focused on Java-based applications. Very few examples include any other languages.

Pearson CD Pipeline – (Pearson)

https://github.com/pearsontechnology/deployment-pipeline-jenkins-plugin

Kubernetes Only

Pure Kubernetes integration. No OpenShift.

Repository Integration

git. Any git, anywhere, via ssh key.

Language agnostic

The pipeline is entirely agnostic to language or build tools. This CI/CD platform does not specify any deep integrations into other services. If you want it, specify the package desired through the yaml config files and use it. Pearson’s CD pipeline isn’t specific to any particular language because we have 400+ completely separate development teams who need to work with it.

Artifact Repository

The Pearson CD pipeline does not specify any particular artifact repo. It does use a local aptly repo for caching deb packages, but nothing prevents artifacts from being shipped off to anywhere you like through the build process.

Ubuntu centric (currently)

Currently the CD pipeline is very much Ubuntu-centric. The project has a strong desire to integrate with Alpine and other base images, but we simply aren’t there yet. This would be an excellent time to ask our community for help. Please?

Opinionated

Pearson’s CD pipeline is opinionated about how and the order in which build/test/deploy happens. The tools used to perform build and test however are up to you. This gives greater flexibility but places the onus on the team around their choice of build/test tools.
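To make that split concrete, here is a minimal Python sketch of the idea. It is not the pipeline’s actual code: the names `STAGE_ORDER` and `run_pipeline`, and the placeholder commands, are hypothetical and exist only to illustrate a fixed stage order combined with team-chosen tools.

```python
import subprocess
import sys
from typing import Dict, List

# The opinionated part: the pipeline fixes the order of stages.
# (Hypothetical stage names, chosen to mirror build/test/deploy.)
STAGE_ORDER = ["build", "test", "deploy"]


def run_pipeline(commands: Dict[str, List[str]]) -> List[str]:
    """Run team-supplied commands in the fixed stage order.

    Returns the stages that completed successfully and stops at the
    first stage whose command exits with a non-zero status.
    """
    missing = [stage for stage in STAGE_ORDER if stage not in commands]
    if missing:
        raise ValueError(f"missing commands for stages: {missing}")

    completed = []
    for stage in STAGE_ORDER:
        # The flexible part: each command is whatever tool the team chose.
        result = subprocess.run(commands[stage])
        if result.returncode != 0:
            break
        completed.append(stage)
    return completed


if __name__ == "__main__":
    # Placeholder commands; a real team would call its own build/test tools.
    noop = [sys.executable, "-c", "pass"]
    print(run_pipeline({"build": noop, "test": noop, "deploy": noop}))
```

The team never gets to reorder or skip stages, but it is free to put Maven, npm, make or anything else behind each one, which is where the onus on the team comes from.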

Code Quality

The Pearson CD pipeline performs everything as code. What this means is that all of your tests should exist as code in a repository. Then simply point Jenkins to that repo and let it rip. The pipeline will handle the rest, including spinning off the necessary number of slaves to do the job.

Ease of Use

Pearson’s CD pipeline is simple once the components are understood. Configuration code is reduced to a minimum.

Scalability

The CD pipeline will automatically spin up Jenkins slaves for various work requirements. It doesn’t matter if there are 1 or 50 microservices, build/test/deploy is relatively fast.

Tenancy

Pearson’s CD pipeline is intended to be used as a pipeline per project. Or better put, a pipeline per development team, as each Jenkins pipeline can manage multiple Kubernetes namespaces. Pearson divides its dev, stg and prd environments by namespace.
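As a rough illustration of environments divided by namespace, the Python sketch below maps one project to one namespace per environment. The `<project>-<env>` naming scheme and the function name are assumptions made for this example, not necessarily the convention the pipeline itself uses.

```python
from typing import Dict, Tuple

# The three environments named above.
ENVIRONMENTS: Tuple[str, ...] = ("dev", "stg", "prd")


def namespaces_for_project(project: str) -> Dict[str, str]:
    """Map each environment to a namespace name such as 'myapp-dev'.

    The '<project>-<env>' pattern is a hypothetical naming scheme.
    """
    return {env: f"{project}-{env}" for env in ENVIRONMENTS}
```

A single team pipeline would then deploy the same service to each of these namespaces in turn, rather than needing a separate cluster per environment.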

Documentation

Well, let’s just say Pearson’s documentation on this subject is currently lacking. There are plenty of items we need to add, and they will be coming soon.

Final Thoughts:

Fabric8 deployment pipeline

The plugin the Fabric8 team has built for integrating with Kubernetes/OpenShift is awesome. In fact, the Pearson deployment pipeline intends to take advantage of some of their work. Hence the greatness of the open source community. If you have used Jenkinsfile, this will feel familiar to you. The Fabric8 plugin is focused on what tools should be used (i.e. SonarQube, Nexus, Gogs, Apache Camel, JBoss Fuse). This could be explained as deeper integration allowing for a seamless experience, but I would argue that most of these tools have APIs, and it’s not difficult to make a call out to them, which would allow for a tool-agnostic approach. They also have a very high degree of focus on Java applications, which doesn’t lend itself to the rest of the dev ecosystem. As I mentioned above, they do state they can integrate with other languages, but I’ve been unable to find good examples of this in the documentation.

Note: I was unable to find documentation on how the Fabric8 deployment pipeline scales. If someone has this information readily available, I would love to read/hear about it. It’s quite possible I just missed it.

Provided Jenkinsfile is a known entity, Java centric is the norm and you already integrate with many of the tools Fabric8 provides, this is probably a great fit for your team. If you need to have control over the CI/CD process, Fabric8 could be a good fit for you.

Pearson deployment pipeline

This is an early open source project. There are limitations around Ubuntu which we intend to alleviate. We simply haven’t had the demand from our customers to prioritize it yet. **Plug for the community getting involved here.** Pearson’s deployment pipeline is very flexible in the sense of what tools it can integrate with, yet more deterministic as to how the CI/CD process should work. There is no limitation on language. The Pearson deployment pipeline is easy to get started with and highly scalable. Jenkins will simply scale the number of slaves it needs to perform the work. Because the deployment pipeline abstracts away much of the CI/CD process, the yaml configuration will not be familiar at first.

If you don’t know Jenkins and you really don’t want to know the depths of Jenkins, Pearson’s pipeline tool might be a good place to start. Its three simple yaml config files will reduce the amount of configuration you need to get started. I would posit it will take half as many lines of config to create your pipeline.

The Pearson Deployment Pipeline project needs better examples/templates on how to work with various languages.

Note:

Please remember, this blog post reflects a single point in time. Both projects are moving, evolving and hopefully shaping the way we think about pipelines in a container world.

Key Considerations:

Fabric8

Integrates well with OpenShift and Kubernetes

Tight integrations with other tools like SonarQube, Camel, ActiveMQ, Gogs, etc.

Less focus on how the CI/CD pipeline should work

Java centric

Requires other Fabric8 projects to get full utility from it

Tenancy – I’m not entirely sure. I probably just missed this in the documentation.

Pearson

Kubernetes Only

All purpose CD pipeline

Language Agnostic

More opinionated about the build/test/deploy process

Highly Scalable

Tenant per dev team/project

Easy transition for developers to move between dev teams

More onus on teams to create their build artifacts